In this article, we take a deep dive into configuring and running a Spring Batch Job, along with its various flavors. In the last two articles, we covered the basics of Spring Batch and built a basic Spring Batch application.

Spring Batch Job Configuring

In the first article, we saw the overall architecture of Spring Batch and observed that a Spring Batch job looks like a straightforward container for the steps it runs. As developers, however, we need to know the various ways a job can be configured, how a job is run, and how its metadata is managed.

In this tutorial, we look at configuring a Job, a JobRepository, and a JobLauncher, and at running a batch Job from the command line.

1. Configuring a Spring Batch Job

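Job is a Java interface with several implementations, such as AbstractJob, FlowJob, GroupAwareJob, JsrFlowJob, and SimpleJob. JobBuilderFactory (an application of the builder design pattern) hides these implementations from us: JobBuilderFactory.get() returns a builder on which we describe the job fluently, and build() returns the Job object.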

Java Configuration

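```java
import org.springframework.batch.core.Job;
import org.springframework.batch.core.JobExecutionListener;
import org.springframework.batch.core.Step;
import org.springframework.batch.core.configuration.annotation.JobBuilderFactory;
import org.springframework.batch.core.configuration.annotation.StepBuilderFactory;
import org.springframework.batch.core.launch.support.RunIdIncrementer;
import org.springframework.batch.item.file.FlatFileItemReader;
import org.springframework.batch.item.file.FlatFileItemWriter;
import org.springframework.batch.item.file.builder.FlatFileItemReaderBuilder;
import org.springframework.batch.item.file.builder.FlatFileItemWriterBuilder;
import org.springframework.batch.item.file.transform.PassThroughLineAggregator;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;
import org.springframework.core.io.ClassPathResource;
import org.springframework.core.io.FileSystemResource;

// StockInfo, StockInfoProcessor, SpringBatchJobExecutionListener,
// SpringBatchStepListener and SpringBatchJobCompletionListener are classes
// from this article's project, not part of Spring Batch.
@Configuration
public class SpringBatchConfig {

    @Autowired
    public JobBuilderFactory jobBuilderFactory;

    @Autowired
    public StepBuilderFactory stepBuilderFactory;

    @Bean
    public Job processJob() {
        return jobBuilderFactory.get("stockpricesinfojob")
                .incrementer(new RunIdIncrementer())   // adds an incrementing run.id parameter
                .listener(new SpringBatchJobExecutionListener())
                .flow(StockPricesInfoStep())
                .end()
                .build();
    }

    @Bean
    public Step StockPricesInfoStep() {
        return stepBuilderFactory.get("step1")
                .listener(new SpringBatchStepListener())
                .<StockInfo, String>chunk(10)           // 10 items per chunk, written together
                .reader(reader())
                .processor(stockInfoProcessor())
                .writer(writer())
                .faultTolerant()
                .retryLimit(3)                          // up to 3 attempts per failing item
                .retry(Exception.class)
                .build();
    }

    @Bean
    public FlatFileItemReader<StockInfo> reader() {
        return new FlatFileItemReaderBuilder<StockInfo>()
                .name("stockInfoItemReader")
                .resource(new ClassPathResource("csv/stockinfo.csv"))
                .delimited()
                .names(new String[] {
                        "stockId", "stockName", "stockPrice", "yearlyHigh",
                        "yearlyLow", "address", "sector", "market"
                })
                .targetType(StockInfo.class)            // map each CSV line onto a StockInfo bean
                .build();
    }

    @Bean
    public StockInfoProcessor stockInfoProcessor() {
        return new StockInfoProcessor();
    }

    @Bean
    public FlatFileItemWriter<String> writer() {
        return new FlatFileItemWriterBuilder<String>()
                .name("stockInfoItemWriter")
                .resource(new FileSystemResource("target/output.txt"))
                .lineAggregator(new PassThroughLineAggregator<>())  // write each String as-is
                .build();
    }

    @Bean
    public JobExecutionListener listener() {
        return new SpringBatchJobCompletionListener();
    }
}
```

The Job and the steps inside it require a JobRepository. In Spring Batch, the JobRepository handles the CRUD (create, read, update, and delete) operations on batch metadata and persists it on behalf of the JobLauncher, the Job, and its Steps. In the code above, we create a Job with one Step, and that Step in turn has an ItemReader, an ItemProcessor, and an ItemWriter.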
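The chunk(10) setting is what ties the reader, processor, and writer together. The following is a JDK-only illustration of that chunk-oriented loop, assuming a hypothetical ChunkLoop class (not part of Spring Batch) and deliberately leaving out transactions, retry, and skip handling: items are read one at a time, processed, and handed to the writer one chunk at a time.

```java
import java.util.ArrayList;
import java.util.Iterator;
import java.util.List;
import java.util.function.Consumer;
import java.util.function.Function;

// Hypothetical, JDK-only illustration of chunk-oriented processing.
// Not Spring Batch code: transactions, retry and skip handling are omitted.
final class ChunkLoop<I, O> {
    private final int chunkSize;

    ChunkLoop(int chunkSize) {
        if (chunkSize < 1) {
            throw new IllegalArgumentException("chunkSize must be at least 1");
        }
        this.chunkSize = chunkSize;
    }

    void run(Iterator<I> reader, Function<I, O> processor, Consumer<List<O>> writer) {
        while (reader.hasNext()) {
            // 1. Read up to chunkSize items (the chunk size counts items read).
            List<I> read = new ArrayList<>(chunkSize);
            while (read.size() < chunkSize && reader.hasNext()) {
                read.add(reader.next());
            }
            // 2. Process each item; a null result filters the item out,
            //    as returning null from an ItemProcessor does.
            List<O> processed = new ArrayList<>(read.size());
            for (I item : read) {
                O out = processor.apply(item);
                if (out != null) {
                    processed.add(out);
                }
            }
            // 3. Hand the whole chunk to the writer in one call.
            if (!processed.isEmpty()) {
                writer.accept(processed);
            }
        }
    }
}
```

With 25 input items and a chunk size of 10, the writer in this sketch is called three times, with 10, 10, and 5 items.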

1.1. Restartability

We know that launching a Job with a given set of identifying JobParameters creates a JobInstance, and every attempt to run that instance creates a JobExecution. So, if a JobInstance already has a JobExecution and we launch it a second time, that is a restart. Ideally, a job should be able to start from where it left off; in simple words, the job must maintain its state.

It is up to the developer and designer to decide whether a job may be restarted. We control this behavior when configuring the Job, by calling preventRestart() on the builder returned by JobBuilderFactory.

```java
@Bean
public Job processJob() {
    return jobBuilderFactory.get("stockpricesinfojob")
            .preventRestart()                    // this job must never be restarted
            .incrementer(new RunIdIncrementer())
            .listener(new SpringBatchJobExecutionListener())
            .flow(StockPricesInfoStep())
            .end()
            .build();
}
```

With preventRestart() set, an attempt to launch an existing JobInstance again fails with a JobRestartException. Independently of this setting, a JobInstance whose last execution COMPLETED cannot be run again; Spring Batch throws a JobInstanceAlreadyCompleteException in that case.
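To make these rules concrete, here is a simplified, hypothetical model written with the JDK only; RestartModel and its Status enum are illustrative names, not Spring Batch classes, and it throws IllegalStateException where Spring Batch throws its own exceptions. A JobInstance is keyed by the job name plus its identifying parameters, and each launch adds a JobExecution to it. Launching an existing instance is rejected if the job is not restartable or if its last execution completed.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical, simplified model of JobInstance/JobExecution restart rules.
// Not Spring Batch code: real launches go through JobLauncher and JobRepository.
final class RestartModel {
    enum Status { COMPLETED, FAILED, STOPPED }

    // One entry per JobInstance (job name + identifying parameters),
    // holding the status of each of its executions in launch order.
    private final Map<String, List<Status>> executionsByInstance = new HashMap<>();

    /** Records a new execution and returns the instance's execution count (1 = first run). */
    int launch(String jobName, String identifyingParams, boolean restartable, Status outcome) {
        String instanceKey = jobName + "|" + identifyingParams;
        List<Status> executions = executionsByInstance.computeIfAbsent(instanceKey, k -> new ArrayList<>());
        if (!executions.isEmpty()) {
            if (!restartable) {
                // Mirrors preventRestart(): a second launch of the same instance is refused.
                throw new IllegalStateException("JobInstance already exists and is not restartable");
            }
            if (executions.get(executions.size() - 1) == Status.COMPLETED) {
                // A completed instance cannot be run again.
                throw new IllegalStateException("JobInstance already completed");
            }
        }
        executions.add(outcome);
        return executions.size();
    }
}
```

This is also why the jobs in this article use RunIdIncrementer: when the launching code applies the incrementer, every launch gets a new run.id and therefore a new JobInstance rather than a restart.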

1.2. Intercepting Job Execution

We can intercept a JobExecution to be notified of the events in its life cycle and run our own logic at those points. We do this with the JobExecutionListener interface, which has two methods: beforeJob() and afterJob().

```java
// org.springframework.batch.core.JobExecutionListener as declared in Spring Batch 4.x
public interface JobExecutionListener {

    // Called before the job starts executing.
    void beforeJob(JobExecution jobExecution);

    // Called after the job has finished, whatever its outcome.
    void afterJob(JobExecution jobExecution);
}
```

We register a JobExecutionListener on the Job when building it. In the code below, we add our implementation, SpringBatchJobCompletionListener.

```java
@Bean
public Job processJob() {
    return jobBuilderFactory.get("stockpricesinfojob")
            .preventRestart()
            .incrementer(new RunIdIncrementer())
            .listener(new SpringBatchJobCompletionListener())  // our JobExecutionListener
            .flow(StockPricesInfoStep())
            .end()
            .build();
}
```

Note that afterJob() is invoked regardless of the JobExecution status. If we want custom logic for particular statuses, we can check the status inside the listener class, as the code below does.

```java
package com.javadevjournal.springbootbatch.listener;

import org.slf4j.Logger;
import org.slf4j.LoggerFactory;
import org.springframework.batch.core.BatchStatus;
import org.springframework.batch.core.JobExecution;
import org.springframework.batch.core.listener.JobExecutionListenerSupport;

public class SpringBatchJobCompletionListener extends JobExecutionListenerSupport {

    private static final Logger logger = LoggerFactory.getLogger(SpringBatchJobCompletionListener.class);

    @Override
    public void beforeJob(JobExecution jobExecution) {
        logger.info("BEFORE BATCH JOB STARTS");
    }

    @Override
    public void afterJob(JobExecution jobExecution) {
        // afterJob() runs for every outcome, so branch on the final status.
        if (jobExecution.getStatus() == BatchStatus.COMPLETED) {
            logger.info("BATCH JOB COMPLETED SUCCESSFULLY");
        } else if (jobExecution.getStatus() == BatchStatus.FAILED) {
            logger.info("BATCH JOB FAILED");
        }
    }
}
```

A JobExecution can have the following statuses (BatchStatus values):

- COMPLETED

- STARTING

- STARTED

- STOPPING

- STOPPED

- FAILED

- ABANDONED

- UNKNOWN
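Since afterJob() fires for every outcome, a listener may want to report more than COMPLETED and FAILED. The sketch below uses a local JobStatus enum that mirrors the list above (a stand-in for BatchStatus so that it runs without Spring Batch) and a hypothetical AfterJobMessages helper that an afterJob() implementation could call.

```java
// Local stand-in mirroring the BatchStatus values listed above (hypothetical, JDK-only).
enum JobStatus {
    COMPLETED, STARTING, STARTED, STOPPING, STOPPED, FAILED, ABANDONED, UNKNOWN
}

// Hypothetical helper an afterJob() implementation could delegate to.
final class AfterJobMessages {
    private AfterJobMessages() {
    }

    static String describe(JobStatus status) {
        switch (status) {
            case COMPLETED:
                return "BATCH JOB COMPLETED SUCCESSFULLY";
            case FAILED:
                return "BATCH JOB FAILED";
            case STOPPING:
            case STOPPED:
                return "BATCH JOB STOPPED";
            case ABANDONED:
                return "BATCH JOB ABANDONED";
            default:
                // STARTING, STARTED and UNKNOWN: report the raw status.
                return "BATCH JOB ENDED WITH STATUS " + status;
        }
    }
}
```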

1.3. JobParametersValidator

Jobs built on AbstractJob (which includes the SimpleJob and FlowJob instances the builders create) can declare a validator for their JobParameters. This is useful when we want to ensure that a Job is started only when all mandatory parameters are present.

We can use DefaultJobParametersValidator, the provided implementation of the JobParametersValidator interface, or implement a custom validator. In the code below, we declare a validator through the builder obtained from JobBuilderFactory.

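```java
@Bean
public Job processJob() {
    return jobBuilderFactory.get("stockpricesinfojob")
            .validator(validateParameters())     // checked when the job is launched
            .incrementer(new RunIdIncrementer())
            .listener(listener())
            .flow(StockPricesInfoStep())
            .end()
            .build();
}

// Requires org.springframework.batch.core.JobParametersValidator and
// org.springframework.batch.core.job.DefaultJobParametersValidator.
// "schedule.date" as a required key is an illustrative choice; "run.id" is
// listed as optional because RunIdIncrementer adds it.
private JobParametersValidator validateParameters() {
    return new DefaultJobParametersValidator(
            new String[] {"schedule.date"},   // required keys
            new String[] {"run.id"});         // optional keys
}
```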
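To show what such a validator checks, here is a JDK-only sketch of the required/optional-key rules that, as we understand it, DefaultJobParametersValidator applies: when optional keys are declared, any key that is neither required nor optional is rejected, and every required key must be present. RequiredKeysValidator is a hypothetical name, and it throws IllegalArgumentException where Spring Batch would throw JobParametersInvalidException.

```java
import java.util.Collection;
import java.util.LinkedHashSet;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

// Hypothetical, JDK-only sketch of required/optional job parameter validation.
final class RequiredKeysValidator {
    private final Set<String> requiredKeys;
    private final Set<String> optionalKeys;

    RequiredKeysValidator(Collection<String> requiredKeys, Collection<String> optionalKeys) {
        this.requiredKeys = new LinkedHashSet<>(requiredKeys);
        this.optionalKeys = new LinkedHashSet<>(optionalKeys);
    }

    void validate(Map<String, ?> parameters) {
        if (parameters == null) {
            throw new IllegalArgumentException("The job parameters must not be null");
        }
        // With optional keys declared, every key must be either required or optional.
        if (!optionalKeys.isEmpty()) {
            Set<String> unexpected = new TreeSet<>(parameters.keySet());
            unexpected.removeAll(requiredKeys);
            unexpected.removeAll(optionalKeys);
            if (!unexpected.isEmpty()) {
                throw new IllegalArgumentException("Keys neither required nor optional: " + unexpected);
            }
        }
        // Every required key must be present.
        Set<String> missing = new TreeSet<>(requiredKeys);
        missing.removeAll(parameters.keySet());
        if (!missing.isEmpty()) {
            throw new IllegalArgumentException("Missing required keys: " + missing);
        }
    }
}
```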

2. Configuring a JobRepository

When we add the @EnableBatchProcessing annotation to our Spring Boot application, it provides a JobRepository for us. Sometimes, however, we need to configure the JobRepository ourselves.

As stated earlier in this article, the JobRepository in Spring Batch handles the CRUD (create, read, update, and delete) operations on batch metadata and persists it for the JobLauncher, the Job, and its Steps. To configure it with Java configuration, we can implement the BatchConfigurer interface and customize the JobRepository as needed; we must provide a DataSource for it. In Spring Batch 4.x, extending DefaultBatchConfigurer is an alternative that lets us override only the parts we need.

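```java
// org.springframework.batch.core.configuration.annotation.BatchConfigurer
public interface BatchConfigurer {

    JobRepository getJobRepository() throws Exception;

    PlatformTransactionManager getTransactionManager() throws Exception;

    JobLauncher getJobLauncher() throws Exception;

    JobExplorer getJobExplorer() throws Exception;
}
```

```java
import javax.sql.DataSource;

import org.springframework.batch.core.configuration.annotation.BatchConfigurer;
import org.springframework.batch.core.repository.JobRepository;
import org.springframework.batch.core.repository.support.JobRepositoryFactoryBean;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.transaction.PlatformTransactionManager;

public class SpringBatchBasicRepository implements BatchConfigurer {

    @Autowired
    private DataSource dataSource;

    @Autowired
    private PlatformTransactionManager transactionManager;

    @Override
    public JobRepository getJobRepository() throws Exception {
        JobRepositoryFactoryBean factory = new JobRepositoryFactoryBean();
        factory.setDataSource(dataSource);                   // required
        factory.setTransactionManager(transactionManager);   // required
        factory.setIsolationLevelForCreate("ISOLATION_SERIALIZABLE");
        factory.setTablePrefix("BATCH_");
        factory.setMaxVarCharLength(1200);
        factory.afterPropertiesSet();                        // validate settings and initialize
        return factory.getObject();
    }

    // getTransactionManager(), getJobLauncher() and getJobExplorer() are omitted
    // for brevity; a class implementing BatchConfigurer must provide all four.
}
```

Apart from the DataSource and the TransactionManager, the other options are optional; if we do not set them, Spring Batch uses default values.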

2.1. Transaction Configuration for the JobRepository

The JobRepository's transaction configuration sets the isolation level used when it creates a new JobExecution (setIsolationLevelForCreate). The default is ISOLATION_SERIALIZABLE, which ensures that if two processes try to launch the same job at the same time, only one succeeds. Depending on our requirements, we can relax it to ISOLATION_READ_COMMITTED, ISOLATION_READ_UNCOMMITTED, or ISOLATION_REPEATABLE_READ.

2.2. Changing the Table Prefix

We can change the table prefix with the setter setTablePrefix("desired prefix"). The default prefix is "BATCH_", which gives tables such as BATCH_JOB_INSTANCE and BATCH_STEP_EXECUTION. A common reason to change it is to use tables that live in a different schema: since the schema name comes before the table name, a prefix such as "SCHEMA_OWNER.BATCH_" qualifies every metadata table with that schema.

```java
@Override
public JobRepository getJobRepository() throws Exception {
    JobRepositoryFactoryBean factory = new JobRepositoryFactoryBean();
    factory.setDataSource(dataSource);
    factory.setTransactionManager(transactionManager);
    factory.setIsolationLevelForCreate("ISOLATION_SERIALIZABLE");
    factory.setTablePrefix("SCHEMA_OWNER.BATCH_");   // e.g. SCHEMA_OWNER.BATCH_JOB_INSTANCE
    factory.setMaxVarCharLength(1200);
    factory.afterPropertiesSet();
    return factory.getObject();
}
```
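Why does a schema name work as a prefix? As we understand it, Spring Batch's JDBC DAOs write their SQL against a %PREFIX% placeholder and replace it with the configured table prefix. The sketch below imitates that substitution with plain String.replace; TablePrefixDemo and the query text are simplified, hypothetical stand-ins, not the framework's actual SQL.

```java
// Hypothetical sketch: how a configured table prefix ends up in the SQL.
final class TablePrefixDemo {
    // Placeholder that, as we understand it, Spring Batch's JDBC DAOs use in their queries.
    static final String PREFIX_PLACEHOLDER = "%PREFIX%";

    private TablePrefixDemo() {
    }

    static String resolve(String query, String tablePrefix) {
        return query.replace(PREFIX_PLACEHOLDER, tablePrefix);
    }
}
```

For example, resolving "SELECT JOB_INSTANCE_ID FROM %PREFIX%JOB_INSTANCE" with the prefix "SCHEMA_OWNER.BATCH_" gives "SELECT JOB_INSTANCE_ID FROM SCHEMA_OWNER.BATCH_JOB_INSTANCE", a schema-qualified table name.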

2.3. In-Memory Repository

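When we don't want to persist metadata in a database, Spring Batch provides an in-memory, Map-based version of the JobRepository. Note that MapJobRepositoryFactoryBean is deprecated as of Spring Batch 4.3 and removed in 5.0; the recommended replacement is JobRepositoryFactoryBean with an in-memory database such as H2 or HSQLDB.

```java
@Override
public JobRepository getJobRepository() throws Exception {
    // Metadata lives in memory only and is lost when the JVM exits.
    MapJobRepositoryFactoryBean factory = new MapJobRepositoryFactoryBean();
    factory.setTransactionManager(transactionManager);
    return factory.getObject();
}
```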

3. Configuring a JobLauncher

Among the JobLauncher implementations, SimpleJobLauncher is the most basic; it requires only a JobRepository.

```java
@Override
public JobLauncher getJobLauncher() throws Exception {
    SimpleJobLauncher jobLauncher = new SimpleJobLauncher();
    jobLauncher.setJobRepository(getJobRepository());
    // With no TaskExecutor set, afterPropertiesSet() falls back to a synchronous executor.
    jobLauncher.afterPropertiesSet();
    return jobLauncher;
}
```

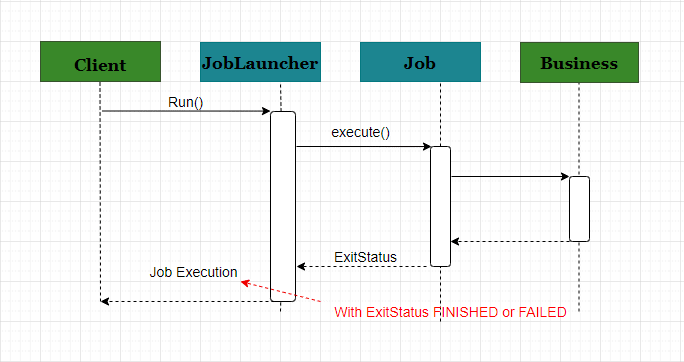

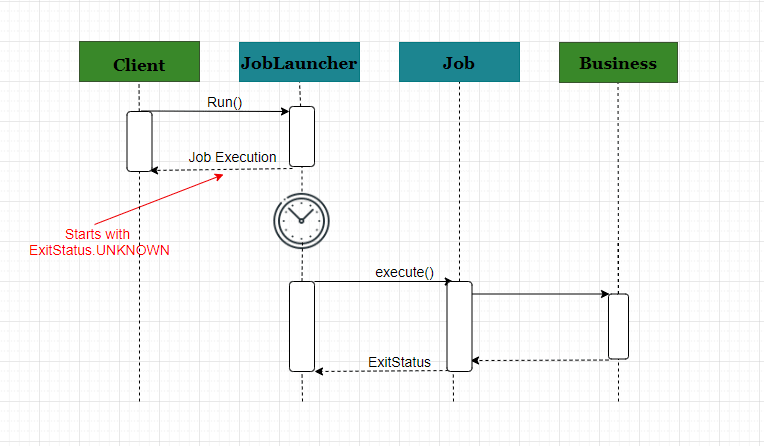

3.1. JobLauncher Sequence Diagram

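Synchronous

The JobLauncher's run() call returns only after the job has finished. This is straightforward and works well for non-HTTP cases, such as a job started by a scheduler.

Asynchronous

run() returns the JobExecution immediately while the job keeps running on another thread. This suits HTTP requests, because we should not keep an HTTP request open for the duration of a long-running batch job.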
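The difference between the two modes can be illustrated without Spring Batch. In this JDK-only analogy, LaunchModes is a hypothetical class: launch() hands the job to an Executor and returns an execution object whose status stays RUNNING until the job ends. With a synchronous executor, the caller regains control only after the job has completed; with a thread pool, launch() returns while the job is still running. In Spring Batch itself, the equivalent switch is the TaskExecutor passed to SimpleJobLauncher.setTaskExecutor(): the default is a synchronous executor, and an asynchronous one such as SimpleAsyncTaskExecutor makes run() return immediately.

```java
import java.util.concurrent.Executor;
import java.util.concurrent.atomic.AtomicReference;

// JDK-only analogy for synchronous vs asynchronous launching.
// LaunchModes is a hypothetical class, not Spring Batch's JobLauncher.
final class LaunchModes {
    enum Status { RUNNING, COMPLETED }

    static final class Execution {
        // Updated from the job's thread, so use a thread-safe holder.
        final AtomicReference<Status> status = new AtomicReference<>(Status.RUNNING);
    }

    private LaunchModes() {
    }

    static Execution launch(Executor executor, Runnable job) {
        Execution execution = new Execution();
        executor.execute(() -> {
            job.run();
            execution.status.set(Status.COMPLETED);
        });
        // Synchronous executor: the job has already finished here.
        // Asynchronous executor: the job may still be running.
        return execution;
    }
}
```

Passing Runnable::run as the Executor runs the job on the calling thread, which is the synchronous case; passing an ExecutorService gives the asynchronous one.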

4. Running a Job

To run a Spring Batch Job, we need two things: the Job and a JobLauncher. They can live in the same application context or in different contexts.

If we launch a Job from the command line, a new JVM is started for each Job run, and each run has its own JobLauncher; such a launch is typically synchronous. If we run our Job inside a web container (for example, in response to an HTTP request), there is typically a single JobLauncher shared by all requests, configured to launch jobs asynchronously.

4.1. CommandLineJobRunner

As we have seen in the previous articles and in this one, we need a main class to start the JVM. So far, we have used the Spring Boot main class to start and run our Job. Alternatively, Spring Batch provides CommandLineJobRunner for the same purpose.

CommandLineJobRunner does the following 4 tasks.

- Load the ApplicationContext.

- Convert the command line arguments to JobParameters.

- Find the Job based on command line arguments.

- From the ApplicationContext, use the JobLauncher to launch the job.

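Everything depends on the arguments passed to it. CommandLineJobRunner takes these arguments:

- jobPath: the XML file or Java configuration class used to create the ApplicationContext.

- jobName: the name of the job to run.

After these two arguments, in that order, we can pass the JobParameters in "name=value" format. A type can be given in parentheses after the name, as with schedule.date(date) below.

```bash
# Quote the parameter: unquoted parentheses are a syntax error in bash.
java CommandLineJobRunner com.javadevjournal.springbootbatch.config.SpringBatchConfig javadevjournaljob 'schedule.date(date)=2020/06/20'
```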
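The job parameters in the command above use a name=value format, optionally with a type in parentheses, as in schedule.date(date)=2020/06/20. That format can be sketched with a small JDK-only parser. JobArgumentParser is hypothetical and only illustrates the format; in Spring Batch the conversion is done by a JobParametersConverter, which in 4.x produces java.util.Date values for date parameters, whereas this sketch returns LocalDate and supports only the string, long, double, and date types.

```java
import java.time.LocalDate;
import java.time.format.DateTimeFormatter;
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical, simplified parser for "name(type)=value" job arguments.
// Not Spring Batch's JobParametersConverter.
final class JobArgumentParser {
    private static final DateTimeFormatter DATE = DateTimeFormatter.ofPattern("yyyy/MM/dd");

    private JobArgumentParser() {
    }

    static Map<String, Object> parse(String... args) {
        Map<String, Object> parameters = new LinkedHashMap<>();
        for (String arg : args) {
            int eq = arg.indexOf('=');
            if (eq < 1) {
                throw new IllegalArgumentException("Expected name=value but got: " + arg);
            }
            String key = arg.substring(0, eq);
            String value = arg.substring(eq + 1);
            String type = "string"; // untyped parameters stay Strings
            int open = key.indexOf('(');
            if (open > 0 && key.endsWith(")")) {
                type = key.substring(open + 1, key.length() - 1);
                key = key.substring(0, open);
            }
            parameters.put(key, convert(type, value));
        }
        return parameters;
    }

    private static Object convert(String type, String value) {
        switch (type) {
            case "string":
                return value;
            case "long":
                return Long.parseLong(value);
            case "double":
                return Double.parseDouble(value);
            case "date":
                return LocalDate.parse(value, DATE);
            default:
                throw new IllegalArgumentException("Unknown parameter type: " + type);
        }
    }
}
```

For example, parsing schedule.date(date)=2020/06/20 yields the key schedule.date mapped to the date 2020-06-20, while a hypothetical untyped argument such as market=NSE stays a String.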

4.2. ExitCodes

A job run through CommandLineJobRunner is usually started by a scheduler, and the operating-system process returns an exit code based on the outcome of the job. Conventionally, 0 means success and 1 means failure.

With Spring Batch jobs, these two exit codes may not be enough, because schedulers often need to handle more complex scenarios (e.g., if JobA returns 4, launch JobB). To support this, Spring Batch has a class called ExitStatus, whose exit code is a String that the developer can set; it is returned as part of the JobExecution from the JobLauncher.

```java
// Excerpt from org.springframework.batch.core.ExitStatus: only the predefined
// constants are shown; fields, constructors and methods are omitted.
public class ExitStatus implements Serializable, Comparable<ExitStatus> {

    public static final ExitStatus UNKNOWN = new ExitStatus("UNKNOWN");
    public static final ExitStatus EXECUTING = new ExitStatus("EXECUTING");
    public static final ExitStatus COMPLETED = new ExitStatus("COMPLETED");
    public static final ExitStatus NOOP = new ExitStatus("NOOP");
    public static final ExitStatus FAILED = new ExitStatus("FAILED");
    public static final ExitStatus STOPPED = new ExitStatus("STOPPED");
}
```

CommandLineJobRunner uses the ExitCodeMapper interface to convert this String exit code into a numeric process exit code.

```java
package org.springframework.batch.core.launch.support;

public interface ExitCodeMapper {

    int JVM_EXITCODE_COMPLETED = 0;
    int JVM_EXITCODE_GENERIC_ERROR = 1;
    int JVM_EXITCODE_JOB_ERROR = 2;

    String NO_SUCH_JOB = "NO_SUCH_JOB";
    String JOB_NOT_PROVIDED = "JOB_NOT_PROVIDED";

    // Converts an ExitStatus exit code into the number passed to System.exit().
    int intValue(String exitCode);
}
```
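For the "if JobA returns 4, launch JobB" scenario, we can map a custom ExitStatus code to a custom process exit code. The JDK-only sketch below declares a local ExitCodeMapping interface with the same intValue(String) method as ExitCodeMapper, plus a hypothetical SchedulerExitCodeMapper that maps the custom code "COMPLETED WITH SKIPS" to 4. In a real project, the class would implement org.springframework.batch.core.launch.support.ExitCodeMapper instead and be registered as a bean; as we understand the reference documentation, CommandLineJobRunner picks up an ExitCodeMapper bean declared in the root application context.

```java
import java.util.HashMap;
import java.util.Map;

// Local copy of ExitCodeMapper's single method, so the sketch runs on the JDK alone.
interface ExitCodeMapping {
    int intValue(String exitCode);
}

// Hypothetical mapper: lets a scheduler branch on a custom exit code.
final class SchedulerExitCodeMapper implements ExitCodeMapping {
    private final Map<String, Integer> codes = new HashMap<>();

    SchedulerExitCodeMapper() {
        codes.put("COMPLETED", 0);              // same value as JVM_EXITCODE_COMPLETED
        codes.put("FAILED", 1);                 // same value as JVM_EXITCODE_GENERIC_ERROR
        codes.put("COMPLETED WITH SKIPS", 4);   // hypothetical custom exit code
    }

    @Override
    public int intValue(String exitCode) {
        // Anything not recognised is treated as a generic error.
        return codes.getOrDefault(exitCode, 1);
    }
}
```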

Summary

In this article on configuring Spring Batch jobs, we covered the following items:

- How to configure a Spring Batch job and its variants.

- How to configure a Spring Batch JobRepository and its variants.

- How to set up a Spring Batch JobLauncher, in its synchronous and asynchronous versions.

- How to run a Spring Batch job.

The source code for this application is available on GitHub.
